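Take the Redis server as an example.

On startup, the Redis server calls bind() with file descriptor fd 6 to bind port 6379, calls listen() to start listening on that port, and then waits for incoming connections with accept().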

    root@pmghong-VirtualBox:/usr/local/redis/bin# strace -ff -o /data/redis_strace/redis ./redis-server
    root@pmghong-VirtualBox:/proc/22330/fd# ls /data/redis_strace/ -l
    total 48
    -rw-r--r-- 1 root root 34741 3月 14 10:37 redis.25102
    -rw-r--r-- 1 root root 134 3月 14 10:37 redis.25105
    -rw-r--r-- 1 root root 134 3月 14 10:37 redis.25106
    -rw-r--r-- 1 root root 134 3月 14 10:37 redis.25107
    root@pmghong-VirtualBox:/proc/22330/fd# vi /data/redis_strace/redis.25102
    ... ...
    epoll_create(1024) = 5
    socket(PF_INET6, SOCK_STREAM, IPPROTO_TCP) = 6
    setsockopt(6, SOL_IPV6, IPV6_V6ONLY, [1], 4) = 0
    setsockopt(6, SOL_SOCKET, SO_REUSEADDR, [1], 4) = 0
    bind(6, {sa_family=AF_INET6, sin6_port=htons(6379), inet_pton(AF_INET6, "::", &sin6_addr), sin6_flowinfo=0, sin6_scope_id=0}, 28) = 0
    listen(6, 511) = 0
    fcntl(6, F_GETFL) = 0x2 (flags O_RDWR)
    fcntl(6, F_SETFL, O_RDWR|O_NONBLOCK) = 0
    ... ...
    root@pmghong-VirtualBox:/proc/25102/fd# ll
    total 0
    dr-x------ 2 root root 0 3月 14 12:05 ./
    dr-xr-xr-x 9 root root 0 3月 14 10:37 ../
    lrwx------ 1 root root 64 3月 14 12:28 0 -> /dev/pts/0
    lrwx------ 1 root root 64 3月 14 12:28 1 -> /dev/pts/0
    lrwx------ 1 root root 64 3月 14 12:05 2 -> /dev/pts/0
    lr-x------ 1 root root 64 3月 14 12:28 3 -> pipe:[104062]
    l-wx------ 1 root root 64 3月 14 12:28 4 -> pipe:[104062]
    lrwx------ 1 root root 64 3月 14 12:28 5 -> anon_inode:[eventpoll]
    lrwx------ 1 root root 64 3月 14 12:28 6 -> socket:[104063]
    lrwx------ 1 root root 64 3月 14 12:28 7 -> socket:[104064]
    lrwx------ 1 root root 64 3月 14 12:28 8 -> socket:[256344]

Stage 1: BIO (blocking I/O)

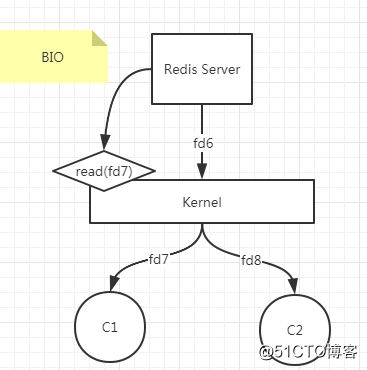

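After startup, the Redis server listens for incoming connections on file descriptor fd 6.

Client1 and Client2 access Redis over the connections represented by fd 7 and fd 8 respectively.

In the BIO model, the Redis server calls read() and blocks. Until data arrives on fd 7 and that request has been handled, it cannot serve any other request.

The drawback is obvious: one blocked read() stalls every other connection, so efficiency is poor.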
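To make the blocking behaviour concrete, here is a minimal sketch in C. It is not taken from the Redis source; `serve_blocking` and its parameters are hypothetical names used only for illustration, and the fds are assumed to be in their default blocking mode.

```c
#include <stdio.h>
#include <unistd.h>

/* Hypothetical helper: serve each connection in turn with blocking read().
 * If conn_fds[i] has no data yet, read() sleeps inside the kernel and every
 * later fd has to wait, even when its data has already arrived.
 * Returns the total number of bytes read, or -1 on error. */
ssize_t serve_blocking(const int *conn_fds, int n, char *buf, size_t cap)
{
    ssize_t total = 0;
    for (int i = 0; i < n; i++) {
        ssize_t got = read(conn_fds[i], buf, cap);  /* may block indefinitely */
        if (got < 0) {
            perror("read");
            return -1;
        }
        total += got;
    }
    return total;
}
```

If the first fd in the array stays silent, the loop never reaches the second one, which is exactly the weakness described above.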

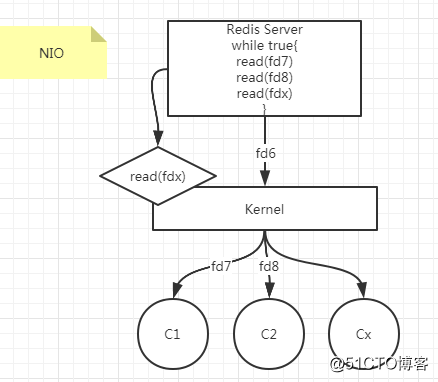

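Stage 2: NIO (non-blocking I/O)

The difference from BIO is that when read(fd7) is called and no data is available, the call returns an error to the Redis server immediately (-1, with errno set to EAGAIN or EWOULDBLOCK) instead of blocking.

When the Redis server receives this kind of error, it knows the connection has no data yet and can move on to the next connection, which improves efficiency.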
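A minimal sketch of that behaviour in C follows. The names `set_nonblocking`, `try_read` and `NO_DATA_YET` are hypothetical and chosen for this example; only `fcntl()`, `read()` and the `EAGAIN`/`EWOULDBLOCK` error codes are real system interfaces.

```c
#include <errno.h>
#include <fcntl.h>
#include <unistd.h>

#define NO_DATA_YET (-2)   /* hypothetical sentinel used by this sketch */

/* Switch fd to non-blocking mode with F_GETFL / F_SETFL. */
int set_nonblocking(int fd)
{
    int flags = fcntl(fd, F_GETFL);
    if (flags == -1)
        return -1;
    return fcntl(fd, F_SETFL, flags | O_NONBLOCK);
}

/* Returns the number of bytes read (> 0), 0 on end of file,
 * -1 on a real error, or NO_DATA_YET when nothing is available right now. */
ssize_t try_read(int fd, char *buf, size_t cap)
{
    ssize_t got = read(fd, buf, cap);
    if (got == -1 && (errno == EAGAIN || errno == EWOULDBLOCK))
        return NO_DATA_YET;   /* the caller can move on to the next fd */
    return got;
}
```

The caller distinguishes "no data yet" from a genuine failure by inspecting errno, which is why the sketch maps the two cases to different return values.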

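    bind(6, {sa_family=AF_INET6, sin6_port=htons(6379), inet_pton(AF_INET6, "::", &sin6_addr), sin6_flowinfo=0, sin6_scope_id=0}, 28) = 0
    listen(6, 511) = 0
    fcntl(6, F_GETFL) = 0x2 (flags O_RDWR)
    fcntl(6, F_SETFL, O_RDWR|O_NONBLOCK) = 0

The problem with this model is that the server has to poll, calling the read(fdx) system call over and over. When many clients are connected, every polling pass costs one system call per fd whether or not data is there, so the process switches between user mode and kernel mode constantly and the switching overhead is high.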
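The cost of polling can be illustrated with a small counter. This is only a sketch under the assumption that every fd has already been put into non-blocking mode; `poll_once` and `set_nonblocking` are hypothetical helper names.

```c
#include <fcntl.h>
#include <unistd.h>

/* Switch fd to non-blocking mode. */
int set_nonblocking(int fd)
{
    int flags = fcntl(fd, F_GETFL);
    if (flags == -1)
        return -1;
    return fcntl(fd, F_SETFL, flags | O_NONBLOCK);
}

/* One polling pass: issue read() on every fd, ready or not.
 * Returns how many fds actually had data; *syscalls grows by one
 * for each read() issued, which is n per pass. */
int poll_once(const int *fds, int n, long *syscalls)
{
    char buf[256];
    int ready = 0;
    for (int i = 0; i < n; i++) {
        ssize_t got = read(fds[i], buf, sizeof buf);
        (*syscalls)++;
        if (got > 0)
            ready++;
        /* got == -1 with EAGAIN/EWOULDBLOCK: nothing to do for this fd yet */
    }
    return ready;
}
```

With three idle connections, one pass already spends three system calls to learn that nothing happened; the count grows linearly with the number of clients.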

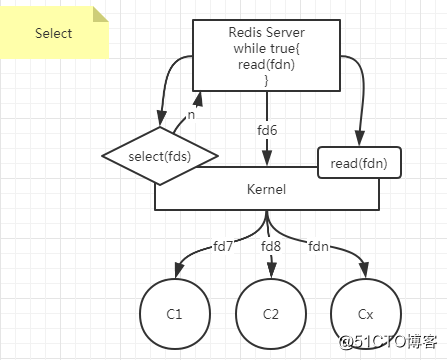

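Stage 3: select (synchronous, non-blocking)

    int select(int nfds, fd_set *readfds, fd_set *writefds, fd_set *exceptfds, struct timeval *timeout);

    select() and pselect() allow a program to monitor multiple file descriptors,
    waiting until one or more of the file descriptors become "ready" for some
    class of I/O operation (e.g., input possible). A file descriptor is
    considered ready if it is possible to perform a corresponding I/O operation
    (e.g., read(2) without blocking, or a sufficiently small write(2)).

The goal is to monitor many fds at once and to issue read() and similar system calls only after one or more fds have become ready, instead of polling with x separate read() calls as in the NIO model above.

select only solves the event-notification problem (which fds are readable and which are not). Inside the kernel, all x fds still have to be scanned to find out which client has pending I/O. The result set is then returned through select to the server, and the server issues read() system calls on the fds that were reported ready.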
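The following C sketch shows one way to use select() in this role. `wait_readable` is a hypothetical helper, and the sketch assumes every fd is smaller than FD_SETSIZE.

```c
#include <stddef.h>
#include <sys/select.h>
#include <unistd.h>

/* Wait up to timeout_ms for any of fds[0..n-1] to become readable.
 * Writes the ready fds into ready_out and returns their count
 * (0 on timeout, -1 on error). */
int wait_readable(const int *fds, int n, int timeout_ms, int *ready_out)
{
    fd_set readfds;
    int maxfd = -1;

    FD_ZERO(&readfds);
    for (int i = 0; i < n; i++) {        /* the set is rebuilt on every call */
        FD_SET(fds[i], &readfds);
        if (fds[i] > maxfd)
            maxfd = fds[i];
    }

    struct timeval tv = { timeout_ms / 1000, (timeout_ms % 1000) * 1000 };
    int rc = select(maxfd + 1, &readfds, NULL, NULL, &tv);
    if (rc <= 0)
        return rc;

    int count = 0;
    for (int i = 0; i < n; i++)          /* user space scans the set again */
        if (FD_ISSET(fds[i], &readfds))
            ready_out[count++] = fds[i];
    return count;
}
```

One system call now replaces x speculative read() calls, but the full fd set is still passed in and walked on every invocation, which is the cost the next stage addresses.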

第四阶段 epoll 多路复用

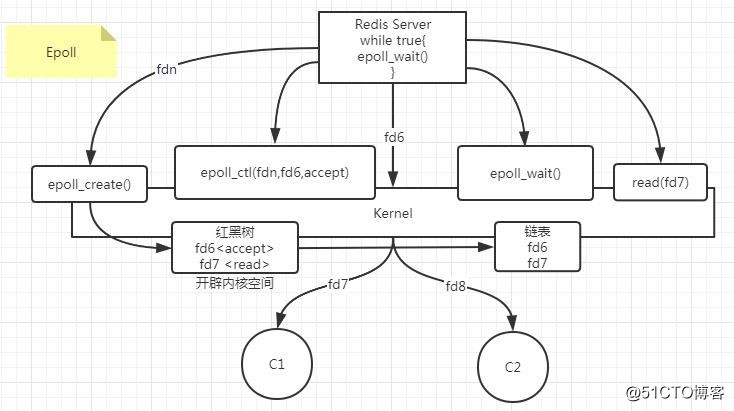

epoll 机制包括 epoll_create / epoll_ctl / epoll_wait 3个系统调用

    // epoll_create
    // Description
    epoll_create() creates an epoll(7) instance.
    // Signature
    int epoll_create(int size);
    // Return value
    On success, these system calls return a nonnegative file descriptor.
    On error, -1 is returned, and errno is set to indicate the error.

    // epoll_ctl
    // Description
    This system call performs control operations on the epoll(7) instance
    referred to by the file descriptor epfd. It requests that the operation op
    be performed for the target file descriptor, fd.
    // Signature
    int epoll_ctl(int epfd, int op, int fd, struct epoll_event *event);
    // op values
    EPOLL_CTL_ADD / EPOLL_CTL_MOD / EPOLL_CTL_DEL
    // Return value
    When successful, epoll_ctl() returns zero. When an error occurs,
    epoll_ctl() returns -1 and errno is set appropriately.

    // epoll_wait
    // Description
    The epoll_wait() system call waits for events on the epoll(7) instance
    referred to by the file descriptor epfd. The memory area pointed to by
    events will contain the events that will be available for the caller.
    Up to maxevents are returned by epoll_wait(). The maxevents argument
    must be greater than zero.
    // Signature
    int epoll_wait(int epfd, struct epoll_event *events, int maxevents, int timeout);
    // Return value
    When successful, epoll_wait() returns the number of file descriptors ready
    for the requested I/O, or zero if no file descriptor became ready during
    the requested timeout milliseconds. When an error occurs, epoll_wait()
    returns -1 and errno is set appropriately.

The corresponding calls in the strace output:

    epoll_create(1024) = 5
    ... ...
    bind(6, {sa_family=AF_INET6, sin6_port=htons(6379), inet_pton(AF_INET6, "::", &sin6_addr), sin6_flowinfo=0, sin6_scope_id=0}, 28) = 0
    listen(6, 511) = 0
    ... ...
    bind(7, {sa_family=AF_INET, sin_port=htons(6379), sin_addr=inet_addr("0.0.0.0")}, 16) = 0
    listen(7, 511) = 0
    ... ...
    epoll_ctl(5, EPOLL_CTL_ADD, 6, {EPOLLIN, {u32=6, u64=6}}) = 0
    epoll_ctl(5, EPOLL_CTL_ADD, 7, {EPOLLIN, {u32=7, u64=7}}) = 0
    epoll_ctl(5, EPOLL_CTL_ADD, 3, {EPOLLIN, {u32=3, u64=3}}) = 0
    write(...)
    read(...)
    epoll_wait(5, [], 10128, 0) = 0

1. At startup, the process calls epoll_create() to create an epoll instance. On success it returns a non-negative fd (call it fdn); on failure it returns -1 and sets errno.

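2. Call epoll_ctl(fdn, op, fd6, <event type>) with the fd returned by epoll_create in the previous step. This registers the listening socket fd 6 for EPOLLIN, so that incoming connections waiting to be accepted are reported.

3. Call epoll_wait() to wait for kernel events. On success it returns the number of ready fds and fills the events array with them. For example, when c1 contacts the Redis server, it first has to establish a connection through fd 6. Because fd 6 was registered with epoll_ctl(), the kernel notices the connection request and epoll_wait() returns fd 6 among the ready events.

4. The Redis server takes the fds that need I/O from epoll_wait(), sees that c1 is requesting a connection on fd 6, calls accept() to obtain a new fd for it (fd 7), and registers that fd with epoll_ctl() as well, for example epoll_ctl(fdn, op, fd7, <read>).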
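The four steps above can be sketched in C roughly as follows. This is an illustration under stated assumptions, not Redis's actual event loop: `open_listener`, `watch_readable` and `handle_events` are hypothetical helpers, the listener binds to 127.0.0.1 rather than to the addresses in the trace, and error handling is kept to a minimum.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <stddef.h>
#include <string.h>
#include <sys/epoll.h>
#include <sys/socket.h>
#include <unistd.h>

/* Hypothetical helper: listening TCP socket on 127.0.0.1:port
 * (port 0 lets the kernel pick a free port). */
int open_listener(unsigned short port)
{
    int fd = socket(AF_INET, SOCK_STREAM, 0);
    if (fd == -1)
        return -1;
    int on = 1;
    setsockopt(fd, SOL_SOCKET, SO_REUSEADDR, &on, sizeof on);

    struct sockaddr_in addr;
    memset(&addr, 0, sizeof addr);
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    addr.sin_port = htons(port);
    if (bind(fd, (struct sockaddr *)&addr, sizeof addr) == -1 ||
        listen(fd, 511) == -1) {
        close(fd);
        return -1;
    }
    return fd;
}

/* Steps 2 and 4: register fd with the epoll instance for EPOLLIN. */
int watch_readable(int epfd, int fd)
{
    struct epoll_event ev;
    memset(&ev, 0, sizeof ev);
    ev.events = EPOLLIN;
    ev.data.fd = fd;
    return epoll_ctl(epfd, EPOLL_CTL_ADD, fd, &ev);
}

/* Steps 3 and 4: wait for events, accept new connections on listen_fd and
 * register them, read from ready client fds.
 * Returns the number of events handled, or -1 on error. */
int handle_events(int epfd, int listen_fd, int timeout_ms)
{
    struct epoll_event events[64];
    int n = epoll_wait(epfd, events, 64, timeout_ms);
    for (int i = 0; i < n; i++) {
        int fd = events[i].data.fd;
        if (fd == listen_fd) {
            int conn = accept(listen_fd, NULL, NULL);
            if (conn != -1 && watch_readable(epfd, conn) == -1)
                close(conn);
        } else {
            char buf[512];
            ssize_t got = read(fd, buf, sizeof buf);
            if (got <= 0)
                close(fd);   /* peer closed the connection, or an error */
        }
    }
    return n;
}
```

A caller would create the instance once with `epoll_create(1024)` (step 1), register the listener with `watch_readable`, and then call `handle_events` in a loop. Only fds that actually have events are returned, so the loop body never touches idle connections.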

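This event-notification approach removes the drawback of select, where the kernel loops over every fd on each call. The application is notified only when an I/O event (triggered by an interrupt) has actually arrived, which improves efficiency.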
